Mountains of available data can be very confusing. Endless variables, confounding factors, margins of error, and faulty systems make it extremely difficult to draw conclusions. But there’s some good news. A few precautions can allow marketers to uncover valuable insights and move closer to accurate results. Campaign data alone isn’t enough. So here are essential lessons for obtaining the smartest analysis.

1. Check your assumptions

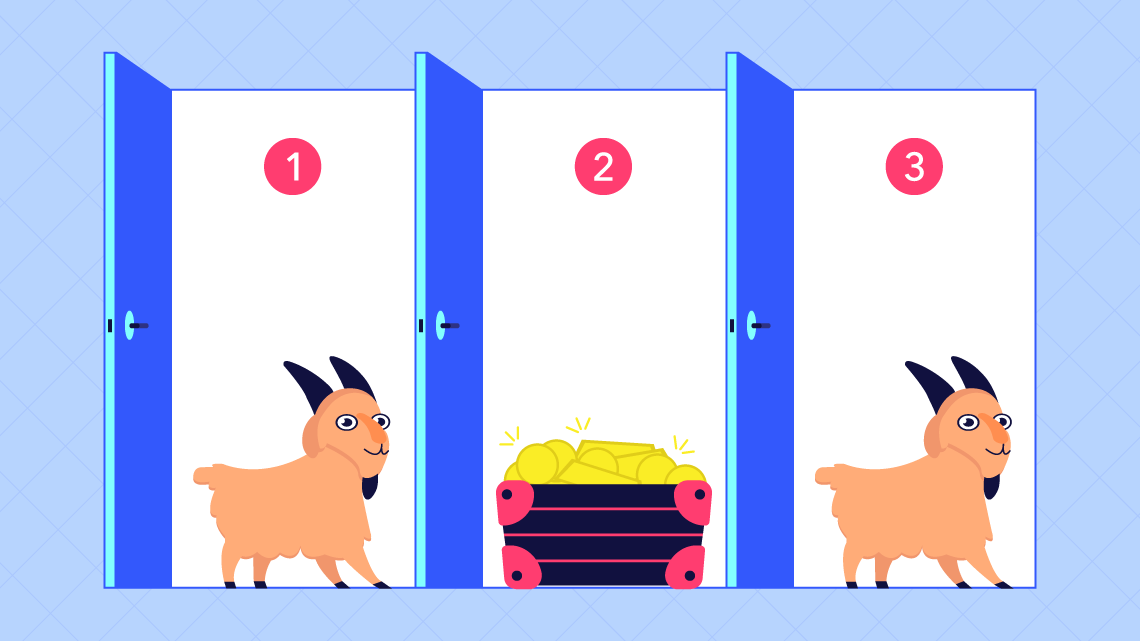

Ever heard of the Monty Hall problem? It comes from the old game show “Let’s Make a Deal.” The show’s setup was simple: there were three doors, and only one had a prize behind it. The host (Monty) asked contestants to guess which door hid the prize. After the contestant chose one, Monty, who knew where the prize was, would open one of the remaining two doors to reveal no prize. He then asked the contestant if they would like to change their decision. Would you stick with your original door or switch?

Intuitively, it seems that it wouldn’t matter. Yet statistically, it does. Switching raises your chance of opening the prize door to about 66.7%. Your initial choice had a ⅓ chance of winning, and that doesn’t change when Monty opens a door, because he always reveals one without a prize. The other ⅔ of the probability is now concentrated on the one remaining closed door, so by switching you win ⅔ of the time. This chart helps clarify.
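If the ⅓ versus ⅔ split still feels wrong, a quick simulation can make it concrete. The sketch below is only an illustration: the function names (`play_round`, `win_rate`) are made up for this example, and it assumes the standard version of the puzzle in which Monty always opens an empty door that the contestant did not pick.

```python
import random


def play_round(switch: bool, rng: random.Random) -> bool:
    """Play one round and return True if the contestant wins the prize."""
    doors = [0, 1, 2]
    prize = rng.choice(doors)
    first_pick = rng.choice(doors)
    # Assumption: Monty knows where the prize is and always opens
    # an empty door that the contestant did not pick.
    opened = rng.choice([d for d in doors if d != first_pick and d != prize])
    if switch:
        # Switch to the only door that is neither picked nor opened.
        final_pick = next(d for d in doors if d != first_pick and d != opened)
    else:
        final_pick = first_pick
    return final_pick == prize


def win_rate(switch: bool, rounds: int = 100_000, seed: int = 0) -> float:
    """Estimate the share of rounds won for a given strategy."""
    rng = random.Random(seed)
    wins = sum(play_round(switch, rng) for _ in range(rounds))
    return wins / rounds


if __name__ == "__main__":
    print(f"stay:   {win_rate(switch=False):.3f}")
    print(f"switch: {win_rate(switch=True):.3f}")
```

Over many rounds, the "stay" rate should settle near one third and the "switch" rate near two thirds.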

What does this teach us? The importance of continuously reevaluating the assumptions that your test relies on. In the Monty Hall problem, the intuitive assumption is wrong because contestants treat the second decision as independent of what came before, a fresh 50/50 choice, when in fact Monty’s reveal depends on both the prize location and the first pick. To apply this in real-life marketing scenarios, examine factors such as the distribution of your data and the residuals of a regression analysis. If these deviate from prior assumptions, it’s time to redesign your test model.

2. Define statistical power

Let’s start with the basics. What’s your sample size? The smaller it is, the more vulnerable your data is to distortion. Of course, larger sample sizes are costly and time-consuming. That’s why particular campaign objectives matter. Smaller samples are often OK for determining overarching behavior, while larger and longer campaigns are needed to detect more incremental changes.

Begin by using a power calculation to ask whether the test has the potential to detect an effect. Power is the probability that the test will detect an effect of at least a specified size, if that effect really exists. Researchers typically require 80% or greater. For digital ad campaigns, your threshold doesn’t have to be this strict. Low power doesn’t mean zero effect; a low-powered test can simply miss smaller effects. Calculate yours here.
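As a rough illustration of what such a calculation involves, here is a minimal sketch for an A/B test that compares two conversion rates. It assumes a two-sided two-proportion z-test with the normal approximation and equal group sizes. The function names and the example rates (a 2.0% baseline against a 2.5% variant) are hypothetical, not figures from any real campaign.

```python
from math import ceil, sqrt
from statistics import NormalDist

_Z = NormalDist()  # standard normal distribution


def two_proportion_power(p_control: float, p_test: float,
                         n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided two-proportion z-test."""
    z_crit = _Z.inv_cdf(1 - alpha / 2)
    p_bar = (p_control + p_test) / 2
    # Standard error if there were no difference (pooled rate).
    se_null = sqrt(2 * p_bar * (1 - p_bar) / n_per_group)
    # Standard error if the assumed difference is real.
    se_alt = sqrt((p_control * (1 - p_control)
                   + p_test * (1 - p_test)) / n_per_group)
    diff = abs(p_test - p_control)
    # Ignores the negligible chance of significance in the wrong direction.
    return _Z.cdf((diff - z_crit * se_null) / se_alt)


def sample_size_per_group(p_control: float, p_test: float,
                          power: float = 0.80, alpha: float = 0.05) -> int:
    """Smallest group size that reaches the requested power."""
    z_alpha = _Z.inv_cdf(1 - alpha / 2)
    z_beta = _Z.inv_cdf(power)
    p_bar = (p_control + p_test) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p_control * (1 - p_control)
                                 + p_test * (1 - p_test))) ** 2
    return ceil(numerator / (p_test - p_control) ** 2)


if __name__ == "__main__":
    n = sample_size_per_group(0.020, 0.025)  # hypothetical rates
    print("users needed per group:", n)
    print("power at that size:", round(two_proportion_power(0.020, 0.025, n), 3))
```

Running the same function with a smaller group size shows how quickly power drops, which is the trade-off between cost and reliability described above.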

3. Brace yourself for outliers

Outliers are participants who react in a radically different way, thereby skewing data. These three simple methods can help.

- Univariate method: Examine extreme values for a single variable on a chart.

- Multivariate method: Take into account atypical combinations across the variables.

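- Minkowski error: Unlike the others, this doesn’t detect or remove outliers. It is an error measure used when fitting a model that is less sensitive to extreme values than the usual squared error.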

Being able to discount outliers makes your data much more accurate. While your power calculation acknowledges some of the unexpected, outliers should be handled with consistent identification and modification. There very well may be more than one type. Your insights team can do some digging to discover the source or reason for outliers.
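For the univariate method, one common way to turn "extreme values on a chart" into a repeatable rule is the 1.5 × IQR fence that box plots use for their whiskers. The sketch below assumes that rule. It is one option among several, and the session durations are invented for illustration.

```python
from statistics import quantiles


def iqr_outliers(values, k=1.5):
    """Return values outside the fences Q1 - k*IQR and Q3 + k*IQR."""
    q1, _, q3 = quantiles(values, n=4)  # quartile cut points
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


if __name__ == "__main__":
    # Hypothetical session durations in seconds for one ad variant.
    durations = [32, 35, 36, 38, 40, 41, 43, 45, 47, 600]
    print(iqr_outliers(durations))
```

Flagging a value this way is only the identification step. Whether to drop it, cap it, or investigate it is still a judgment call for your insights team.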

4. Repeat after us: correlation not causation

When two items are correlated it’s easy to assume one influences the other — but that’s not necessarily the case. Looking solely at the relationship between two time series can only reliably prove a correlation.

A good example is the Hawthorne Effect. This principle is named after a series of experiments conducted from 1924 to 1932 that measured the relationship between worker productivity and environmental conditions (e.g. lighting, organization, reshuffling stations). Near the end of the tests, the researchers realized that productivity fluctuations were not due to the changing conditions. Rather, they were caused by the participants’ knowledge of the experiment.

Certainly, cause and effect can be tricky, as there’s frequently a third factor at play. That’s why many conduct a double-blind controlled trial (think A/B tests) to better isolate the factor being tested. A correlation always has some cause, even if it isn’t the one you first suspect, so it can be useful to dig deeper into your insights and extend your chain of factors.
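To see how a third factor can manufacture a correlation, consider the small simulation below. Everything in it is made up for illustration: a hypothetical `temperature` series drives both `ad_clicks` and `store_visits`, which have no direct link to each other. The two still correlate strongly until the shared driver is accounted for, here by correlating the residuals left over after a simple linear fit on temperature.

```python
import random
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(vx * vy)


def residuals(ys, xs):
    """What is left of ys after a least-squares straight-line fit on xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    intercept = my - slope * mx
    return [y - (intercept + slope * x) for x, y in zip(xs, ys)]


def simulate(n=500, seed=42):
    """Return (raw correlation, correlation after controlling for temperature)."""
    rng = random.Random(seed)
    # Hypothetical third factor that drives both metrics.
    temperature = [rng.uniform(10, 35) for _ in range(n)]
    ad_clicks = [20 * t + rng.gauss(0, 40) for t in temperature]
    store_visits = [5 * t + rng.gauss(0, 10) for t in temperature]
    raw = pearson(ad_clicks, store_visits)
    controlled = pearson(residuals(ad_clicks, temperature),
                         residuals(store_visits, temperature))
    return raw, controlled


if __name__ == "__main__":
    raw, controlled = simulate()
    print(f"raw correlation:             {raw:.2f}")
    print(f"after removing third factor: {controlled:.2f}")
```

In real campaigns the third factor is rarely known in advance, which is why a controlled test remains the more reliable route.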

5. Second-order effects count (a lot)

Second-order effects are the post-measure outcomes. The impact of your campaign is often measured by immediate responses. Unfortunately, this isn’t a true representation of the results. For instance, say your brand is advertising blue sandals. A consumer sees this ad and it leaves a positive impression. They may visit your website later in the day to see what types of handbags you sell. The consumer might also tell their friends about it or even visit your store in person.

Customer Lifetime Value (CLV) is an important measure for marketers. It refers to the total value of that individual to your brand. This measure takes into account repeated purchases, loyalty, and influence on others. In fact, compared to new customers, existing customers are 50% more likely to try new products and spend 31% more. So even if the immediate value is unclear, there’s always a bigger picture.
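There are many ways to estimate CLV. As a back-of-the-envelope sketch, one widely used simplification multiplies average order value by purchase frequency and expected customer lifespan. The function name and inputs below are hypothetical, and this simple version deliberately leaves out margins, discounting, and influence on others.

```python
def simple_clv(avg_order_value: float, purchases_per_year: float,
               years_retained: float) -> float:
    """Rough CLV: order value x yearly purchases x years as a customer."""
    return avg_order_value * purchases_per_year * years_retained


if __name__ == "__main__":
    # Hypothetical shopper: 40.00 per order, 3 orders a year, retained 5 years.
    print(simple_clv(40.0, 3, 5))
```

Even a crude estimate like this helps put a single immediate sale in the context of the whole customer relationship.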

Unfortunately, second-order effects are nearly impossible to measure. When time passes after exposure to an ad, the possibility space is so large that there are too many unknown factors. Still, try to set up a KPI that accounts for longer-term responses — even if you can’t account for it all.

Seeking better analytics and insights? Celtra can help. Drop us a line at marketing@celtra.com to learn how we’re transforming digital advertising.